1. Pokémon Classification

Train data: https://www.kaggle.com/datasets/thedagger/pokemon-generation-one (Pokemon Generation One)

Validation data: https://www.kaggle.com/hlrhegemony/pokemon-image-dataset (Complete Pokemon Image Dataset: 2,500+ clean labeled images, all official art, for Generations 1 through 8)

2. Downloading and Unzipping the Required Datasets

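```python
import os

os.environ['KAGGLE_USERNAME'] = 'your-username'
os.environ['KAGGLE_KEY'] = 'your-api-key'
```

▶ Both values are stored in the kaggle.json file you downloaded from Kaggle. Open it, copy them in, and the downloads below will work.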
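Instead of copying the values by hand, you can read them straight from kaggle.json. A minimal sketch, assuming the file has the usual `{"username": ..., "key": ...}` layout; `load_kaggle_credentials` is a hypothetical helper name and the path in the usage line is an assumption.

```python
import json
import os


def load_kaggle_credentials(path):
    """Hypothetical helper: copy the fields of a kaggle.json file into the
    environment variables the Kaggle CLI reads (KAGGLE_USERNAME, KAGGLE_KEY).
    Returns the username so the caller can confirm which account was loaded."""
    with open(path, encoding="utf-8") as f:
        creds = json.load(f)
    os.environ["KAGGLE_USERNAME"] = creds["username"]
    os.environ["KAGGLE_KEY"] = creds["key"]
    return creds["username"]


# Usage (assumed path; point it at wherever you saved the file):
# load_kaggle_credentials("kaggle.json")
```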

2-1. Download

```
!kaggle datasets download -d thedagger/pokemon-generation-one
!kaggle datasets download -d hlrhegemony/pokemon-image-dataset
```

2-2. Unzip

```
!unzip -q pokemon-generation-one.zip
!unzip -q pokemon-image-dataset.zip
```
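If the `unzip` command is not available (for example on Windows), Python's standard `zipfile` module can do the same extraction. A minimal sketch; `extract_all` is a hypothetical helper name, and the archive names in the usage lines are the ones the Kaggle CLI produced above.

```python
import zipfile


def extract_all(archive_path, dest="."):
    """Hypothetical helper: extract every member of a .zip archive into dest,
    roughly like `unzip -q archive_path -d dest`. Returns the member count."""
    with zipfile.ZipFile(archive_path) as zf:
        zf.extractall(dest)
        return len(zf.namelist())


# Usage:
# for name in ["pokemon-generation-one.zip", "pokemon-image-dataset.zip"]:
#     extract_all(name)
```

Unlike `unzip`, `extractall` overwrites existing files without asking.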

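2-3. Renaming the folders

```
# Rename the extracted 'dataset' folder to 'train'
!mv dataset train

# The data is duplicated in a nested 'dataset' folder, so delete that folder and its contents
!rm -rf train/dataset

# Rename the extracted 'images' folder to 'validation'
!mv images validation
```

3. Inspecting the train and validation folders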

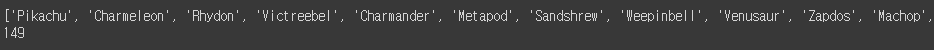

3-1. train

```python
train_labels = os.listdir('train')
print(train_labels)
print(len(train_labels))
```

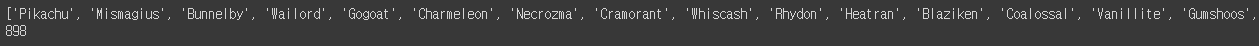

3-2. validation

```python
val_labels = os.listdir('validation')
print(val_labels)
print(len(val_labels))
```

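3-3. Deleting validation folders whose class does not exist in train

```python
import shutil

# Remove every validation class folder that has no matching train folder
for val_label in val_labels:
    if val_label not in train_labels:
        shutil.rmtree(os.path.join('validation', val_label))
```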
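The clean-up in 3-3, together with the check in 3-4, amounts to two set differences between the folder names. A small sketch on plain lists; `compare_labels` and the sample names are illustrative only.

```python
def compare_labels(train_labels, val_labels):
    """Return (extra_in_val, missing_in_val) as sorted lists.

    extra_in_val:   validation folders with no train class (to delete)
    missing_in_val: train classes with no validation folder yet (to create)
    """
    train_set, val_set = set(train_labels), set(val_labels)
    extra_in_val = sorted(val_set - train_set)
    missing_in_val = sorted(train_set - val_set)
    return extra_in_val, missing_in_val


extra, missing = compare_labels(
    ["Pikachu", "Ditto", "MrMime"],
    ["Pikachu", "Ditto", "Togepi", "Lucario"],
)
print(extra)    # ['Lucario', 'Togepi']
print(missing)  # ['MrMime']
```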

3-4. Checking the data

▷ Check validation

```python
val_labels = os.listdir('validation')
len(val_labels)
# Output: 147
```

▷ Compare train with validation, print the classes missing from validation, and create a folder for each of them

```python
for train_label in train_labels:
    if train_label not in val_labels:
        print(train_label)
        os.makedirs(os.path.join('validation', train_label), exist_ok=True)

# Output:
# MrMime
# Farfetchd
```
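Newly created class folders start out empty. A small stdlib sketch that lists class folders still containing no image file, so they can be filled before the datasets are built; `find_empty_classes` is a hypothetical helper, and the extension tuple is an assumption (a subset of the formats `ImageFolder` accepts).

```python
import os

# Assumption: these extensions count as images (a subset of ImageFolder's list)
IMG_EXTENSIONS = (".jpg", ".jpeg", ".png", ".bmp", ".webp")


def find_empty_classes(root):
    """Hypothetical helper: return the sorted names of class folders under
    root that contain no file with an image extension (searched recursively)."""
    with os.scandir(root) as it:
        entries = sorted((e for e in it if e.is_dir()), key=lambda e: e.name)
    empty = []
    for entry in entries:
        has_image = any(
            name.lower().endswith(IMG_EXTENSIONS)
            for _, _, files in os.walk(entry.path)
            for name in files
        )
        if not has_image:
            empty.append(entry.name)
    return empty


# Usage: an empty list means every validation class has at least one image.
# print(find_empty_classes("validation"))
```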

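▷ Add image files to the new MrMime and Farfetchd folders, then check again. These folders must not stay empty, because recent torchvision versions make `ImageFolder` raise an error for a class folder that contains no images.

```python
val_labels = os.listdir('validation')
len(val_labels)
# Output: 149
```

4. Importing the required modules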

```python
# Import the required modules
import torch
import torch.nn as nn
import torch.optim as optim
import matplotlib.pyplot as plt
from torchvision import datasets, models, transforms
from torch.utils.data import DataLoader
```

4-1. Switching to the GPU

```python
# Use the GPU if one is available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(device)
# Output: cuda
```

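4-2. Choosing the image augmentation techniques

```python
data_transforms = {
    # Augmentation is applied to the training set only
    'train': transforms.Compose([
        transforms.Resize((224, 224)),
        # No rotation, random x-axis shear of up to ±10 degrees, random scale of 0.8x to 1.2x
        transforms.RandomAffine(0, shear=10, scale=(0.8, 1.2)),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor()
    ]),
    'validation': transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor()
    ])
}
```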
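To get a feel for `RandomAffine(0, shear=10, scale=(0.8, 1.2))`: per the torchvision docs, a single number for `shear` means an x-axis shear angle drawn from (-10, 10) degrees, and `scale` is drawn from [0.8, 1.2]. The sketch below samples such parameters and applies a simplified shear-then-scale map to a point measured from the image center. It is an illustration under those assumptions, not torchvision's exact implementation (which also handles translation, the inverse mapping, and interpolation).

```python
import math
import random


def sample_affine_params(shear_deg=10.0, scale_range=(0.8, 1.2), rng=random):
    """Draw (shear_angle_deg, scale), each uniformly from its range."""
    shear = rng.uniform(-shear_deg, shear_deg)
    scale = rng.uniform(*scale_range)
    return shear, scale


def apply_shear_scale(x, y, shear_deg, scale):
    """Simplified forward map of a point (x, y) relative to the image center:
    shear parallel to the x-axis, then uniform scaling. Illustration only."""
    t = math.tan(math.radians(shear_deg))
    return scale * (x + t * y), scale * y


rng = random.Random(0)
shear, scale = sample_affine_params(rng=rng)
print(f"shear={shear:.2f} deg, scale={scale:.3f}")
print(apply_shear_scale(50.0, 50.0, shear, scale))
```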

4-3. Creating the dataset objects

```python
# Each subfolder of 'train' / 'validation' becomes one class
image_datasets = {
    'train': datasets.ImageFolder('train', data_transforms['train']),
    'validation': datasets.ImageFolder('validation', data_transforms['validation'])
}
```
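`ImageFolder` numbers classes by sorting the subfolder names, so train and validation share the same label numbering only if they contain exactly the same class folders, which is the point of the folder clean-up in section 3. A stdlib sketch that mimics this mapping and checks the two folders agree; `folder_class_index` and `check_same_classes` are hypothetical helpers, not torchvision API. With the real datasets you could instead compare `image_datasets['train'].class_to_idx == image_datasets['validation'].class_to_idx`.

```python
import os


def folder_class_index(root):
    """Mimic ImageFolder's labeling: sorted subfolder names -> 0, 1, 2, ..."""
    with os.scandir(root) as it:
        classes = sorted(e.name for e in it if e.is_dir())
    return {name: i for i, name in enumerate(classes)}


def check_same_classes(train_root, val_root):
    """Return True if both roots would get identical class-to-index maps."""
    return folder_class_index(train_root) == folder_class_index(val_root)


# Usage with the folders from section 2 (should print True once section 3 is done):
# print(check_same_classes("train", "validation"))
```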

4-4. Creating the data loaders

```python
dataloaders = {
    'train': DataLoader(
        image_datasets['train'],
        batch_size=32,
        shuffle=True     # reshuffle the training data every epoch
    ),
    'validation': DataLoader(
        image_datasets['validation'],
        batch_size=32,
        shuffle=False
    )
}
```

4-5. Checking the dataset sizes

```python
print(len(image_datasets['train']), len(image_datasets['validation']))
# Output: 10657 663
```
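With `batch_size=32`, these counts determine how many batches each loader yields per epoch and how large the last one is. The arithmetic as a small sketch (`batch_layout` is an illustrative name):

```python
import math


def batch_layout(num_samples, batch_size):
    """Return (number_of_batches, size_of_last_batch) for a DataLoader
    with drop_last=False, which is the default."""
    num_batches = math.ceil(num_samples / batch_size)
    last = num_samples - (num_batches - 1) * batch_size
    return num_batches, last


print(batch_layout(10657, 32))  # (334, 1)
print(batch_layout(663, 32))    # (21, 23)
```

So the final training batch holds a single image. Averaging per-batch accuracies gives that one image the same weight as a full batch of 32; weighting each batch by its size, or passing `drop_last=True` to the training `DataLoader`, avoids that skew.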

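4-6. Displaying images

```python
# Show one training batch (32 images) in a 4 x 8 grid, titled with each label index
imgs, labels = next(iter(dataloaders['train']))

_, axes = plt.subplots(4, 8, figsize=(20, 10))
for img, label, ax in zip(imgs, labels, axes.flatten()):
    ax.set_title(label.item())
    # Tensors are (C, H, W); matplotlib expects (H, W, C)
    ax.imshow(img.permute(1, 2, 0))
    ax.axis('off')
```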

4-7. Looking up the class name for an index

```python
image_datasets['train'].classes[101]
# Output: Pikachu
```

5. Using a pretrained EfficientNet-B4 model

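```python
from torchvision.models import efficientnet_b4, EfficientNet_B4_Weights
from torchvision.models._api import WeightsEnum
from torch.hub import load_state_dict_from_url

# Workaround for the hash-check error some torchvision versions raise while
# downloading these weights: load the state dict without verifying its hash.
def get_state_dict(self, *args, **kwargs):
    kwargs.pop("check_hash", None)
    return load_state_dict_from_url(self.url, *args, **kwargs)

WeightsEnum.get_state_dict = get_state_dict

# Load EfficientNet-B4 pretrained on ImageNet and move it to the device
# (for B4, weights="DEFAULT" resolves to these same IMAGENET1K_V1 weights)
model = efficientnet_b4(weights=EfficientNet_B4_Weights.IMAGENET1K_V1).to(device)
```

6. Replacing the FC layer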

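```python
# Freeze the pretrained backbone so its weights are not updated
for param in model.parameters():
    param.requires_grad = False

# Replace the classifier; newly created layers are trainable by default.
# 1792 is EfficientNet-B4's feature size; 149 is the number of Pokémon classes.
model.classifier = nn.Sequential(
    nn.Linear(1792, 512),
    nn.ReLU(),
    nn.Linear(512, 149)
).to(device)
```

7. Training the modified model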
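Because the backbone is frozen, only the new classifier head is trained. Its size follows from `in_features * out_features + out_features` for each `nn.Linear` (the ReLU has no parameters). A plain-Python check of that arithmetic for the sizes used above; `linear_params` is an illustrative helper name.

```python
def linear_params(in_features, out_features, bias=True):
    """Number of parameters in an nn.Linear layer: weights plus optional bias."""
    return in_features * out_features + (out_features if bias else 0)


# Classifier head: Linear(1792, 512) -> ReLU (no parameters) -> Linear(512, 149)
head_params = linear_params(1792, 512) + linear_params(512, 149)
print(head_params)  # 994453
```

In the notebook, `sum(p.numel() for p in model.parameters() if p.requires_grad)` should report the same number, since every backbone parameter was frozen.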

```python
criterion = nn.CrossEntropyLoss()
# Frozen parameters never receive gradients, so Adam leaves them unchanged
optimizer = optim.Adam(model.parameters(), lr=0.001)

epochs = 10

for epoch in range(epochs):
    for phase in ['train', 'validation']:
        if phase == 'train':
            model.train()
        else:
            model.eval()

        sum_losses = 0
        sum_accs = 0

        for x_batch, y_batch in dataloaders[phase]:
            x_batch = x_batch.to(device)
            y_batch = y_batch.to(device)

            # Build the autograd graph only in the training phase
            with torch.set_grad_enabled(phase == 'train'):
                y_pred = model(x_batch)
                loss = criterion(y_pred, y_batch)

            if phase == 'train':
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

            sum_losses = sum_losses + loss.item()

            # Predicted class = index of the highest probability
            y_prob = nn.Softmax(1)(y_pred)
            y_pred_index = torch.argmax(y_prob, axis=1)
            acc = (y_batch == y_pred_index).float().sum() / len(y_batch) * 100
            sum_accs = sum_accs + acc.item()

        # Average the per-batch loss and accuracy over the epoch
        avg_loss = sum_losses / len(dataloaders[phase])
        avg_acc = sum_accs / len(dataloaders[phase])

        print(f'{phase:10s}: Epoch {epoch+1:4d}/{epochs} Loss:{avg_loss:.4f} Accuracy: {avg_acc:.2f}%')
```

8. Saving the trained model weights to a file

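```python
torch.save(model.state_dict(), 'model.pth')
```

8-1. Restoring from the file

```python
# map_location lets the weights load even where the saving device is unavailable
model.load_state_dict(torch.load('model.pth', map_location=device))

# Switch to evaluation mode
model.eval()
```

9. Checking whether selected validation images are classified correctly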

```python
from PIL import Image

# convert('RGB') guarantees 3 channels, matching what ImageFolder feeds the model
img1 = Image.open('./validation/Ditto/0.jpg').convert('RGB')
img2 = Image.open('./validation/Charmander/0.jpg').convert('RGB')

_, axes = plt.subplots(1, 2, figsize=(12, 6))
axes[0].imshow(img1)
axes[0].axis('off')
axes[1].imshow(img2)
axes[1].axis('off')
plt.show()
```

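10. Converting the images to tensors

```python
# Apply the same preprocessing as the validation set (resize + ToTensor)
img1_input = data_transforms['validation'](img1)
img2_input = data_transforms['validation'](img2)

print(img1_input.shape)
print(img2_input.shape)
# Output:
# torch.Size([3, 224, 224])
# torch.Size([3, 224, 224])
```

11. Stacking the two images into a batch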

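```python
# Stack two (3, 224, 224) tensors into one (2, 3, 224, 224) batch
test_batch = torch.stack((img1_input, img2_input))
test_batch = test_batch.to(device)
test_batch.shape
# Output: torch.Size([2, 3, 224, 224])
```

12. Running the model's predictions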

```python
# Inference only, so no gradients are needed
with torch.no_grad():
    y_pred = model(test_batch)

y_prob = nn.Softmax(1)(y_pred)
# The 3 most likely classes (probabilities and indices) for each image
probs, idx = torch.topk(y_prob, k=3)

classes = image_datasets['validation'].classes
_, axes = plt.subplots(1, 2, figsize=(15, 6))

for i, (img, ax) in enumerate(zip([img1, img2], axes)):
    # Title format: "xx.xx% Name, xx.xx% Name, xx.xx% Name"
    ax.set_title(', '.join(
        f'{p * 100:.2f}% {classes[j]}'
        for p, j in zip(probs[i].tolist(), idx[i].tolist())
    ))
    ax.imshow(img)
    ax.axis('off')

plt.show()
```
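`nn.Softmax(1)` normalizes each row of logits into probabilities, and `torch.topk(y_prob, k=3)` returns each row's three largest probabilities with their class indices, largest first. A plain-Python sketch of the same computation for one row; the logits and class names are made up, and `softmax` / `topk` here are illustrative helpers, not the PyTorch functions.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one row of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def topk(values, k):
    """Return (top_values, top_indices), largest first, like torch.topk."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    return [values[i] for i in order], order


classes = ["Bulbasaur", "Charmander", "Ditto", "Pikachu"]  # hypothetical
probs, idx = topk(softmax([0.5, 3.0, 1.0, 2.0]), k=3)
print(", ".join(f"{p * 100:.2f}% {classes[i]}" for p, i in zip(probs, idx)))
```

Subtracting the maximum logit before `exp` leaves the result unchanged but avoids overflow for large logits.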
